The SSH protocol and SSH Applications - Part 5

SSH and SSH Applications from a Security Gateway Perspective

Part 5: Git via SSH from a Gateway Perspective

In the previous parts of this series, we explored the SSH protocol and how files are transferred via the SCP and SFTP protocols. Those protocols are a natural focal point for a gateway that enforces a content security policy when sensitive files are uploaded or downloaded. Among the most sensitive files of an organization, program source code is very much at the top of the list. Developers who manage source code on GitHub often use SSH to pull and push files, and Git runs its own protocol on top of SSH to control those transfers. In this article, we take a closer look at the Git protocol. We explore where challenges arise when inspecting or filtering the exchanged packets (especially the importance of packfile parsing) and how to approach them effectively during development. Understanding Git's packet-level protocol is a crucial step toward building secure and protocol-aware systems that go beyond basic file transfer interception.

Git Protocol over SSH Basics: How Data Flows

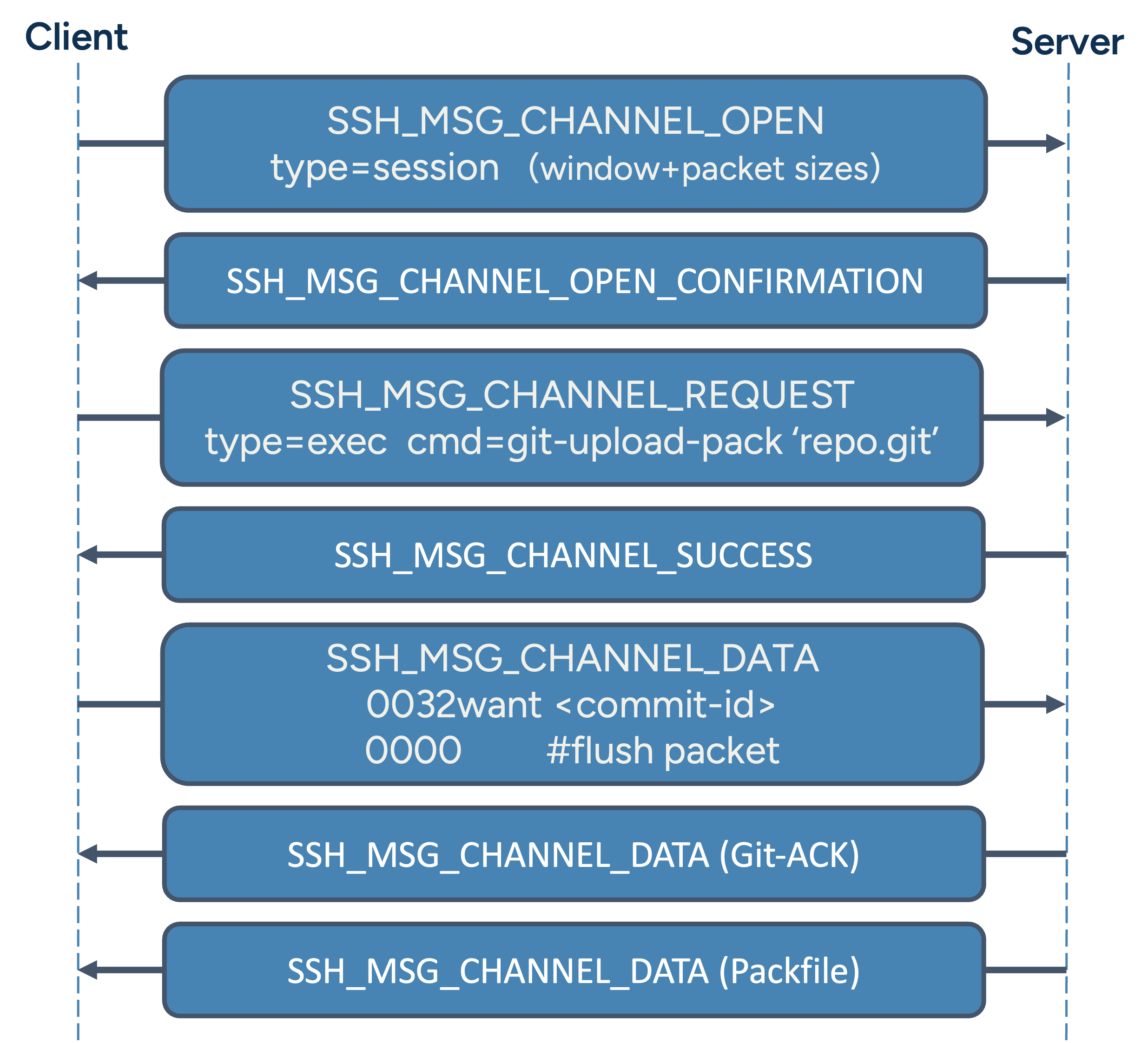

Git supports multiple transport layers including HTTP(S), SSH and its native protocol over TCP. When Git is used over SSH, the actual Git protocol runs on top of an SSH channel. When running a command like git clone git@host:repo.git, the local Git client actually uses a command-based invocation like: ssh git@host git-upload-pack 'repo.git'

This means that the Git client opens an SSH session and executes a server-side Git command (git-upload-pack, git-receive-pack or git-upload-archive). From that point, Git protocol communication happens directly over the SSH data stream, without any additional protocol layer.

This behavior makes it relatively straightforward for a gateway to detect Git invocations over the SSH protocol. For a gateway that wants to inspect what is actually pushed and pulled, the interesting part begins once the packfile is streamed. By then, the client and server have negotiated which objects are needed, and the sending side transmits them as a compressed binary stream.
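As a minimal sketch of that detection step, the following Python function classifies the command string of an SSH exec request. The function name and the returned tuple are our own illustration, and we assume the gateway has already extracted the command string from the channel request.

```python
import shlex
from typing import Optional, Tuple

# Server-side commands a Git client may request through an SSH exec request.
GIT_SERVICES = {
    "git-upload-pack": "fetch/clone",
    "git-receive-pack": "push",
    "git-upload-archive": "archive",
}


def classify_exec_command(command: str) -> Optional[Tuple[str, str]]:
    """Return (service, repository) if the command invokes Git, else None."""
    try:
        argv = shlex.split(command)  # handles the quoted repository path
    except ValueError:               # e.g. unbalanced quotes
        return None
    if len(argv) == 2 and argv[0] in GIT_SERVICES:
        return argv[0], argv[1]
    return None


print(classify_exec_command("git-upload-pack 'repo.git'"))
```

Anything that does not match can be handed to the regular remote command handling of the gateway.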

Framing Git Messages: The Pkt-Line Format

Before a packfile is ever sent, Git clients and servers communicate using a custom line-based protocol called pkt-line. Every message sent within SSH_MSG_CHANNEL_DATA messages is prefixed with a 4-byte ASCII-encoded hexadecimal number indicating the total length of the packet (including the 4 bytes).

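For example, the line 0032want 2c0dedcafefeedbeef multi_ack thin-pack side-band-64k begins with 0032, meaning the full message is 50 bytes long (hex 0x32), including the 4-byte prefix. The example is abbreviated for readability, so its literal character count does not match the prefix.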

Overview of the SSH and Git protocol phases involved in a typical git clone.

Special packets like 0000 are used as flush markers to indicate the end of a section. This format makes the protocol easy to parse on a stream level and allows for basic validation of message integrity in gateways.

In a gateway implementation, this framing is useful because it allows basic inspection and control: messages can be split, verified or buffered cleanly before their payloads are interpreted.
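The framing rules above can be sketched in a few lines of Python. This is an illustration, not a complete implementation: the function name is hypothetical, the whole buffer is assumed to be in memory, and the special short lengths used by newer protocol versions are simply rejected.

```python
from typing import Iterator, Optional

HEX_DIGITS = b"0123456789abcdefABCDEF"


def iter_pkt_lines(buf: bytes) -> Iterator[Optional[bytes]]:
    """Yield the payload of each pkt-line in buf; yield None for a flush (0000)."""
    pos = 0
    while pos < len(buf):
        prefix = buf[pos:pos + 4]
        if len(prefix) < 4 or any(c not in HEX_DIGITS for c in prefix):
            raise ValueError("malformed pkt-line length prefix")
        length = int(prefix, 16)
        if length == 0:              # flush marker, no payload
            pos += 4
            yield None
            continue
        if length < 4:               # 0001-0003 cannot describe a data packet
            raise ValueError("special pkt-line not handled in this sketch")
        if pos + length > len(buf):  # a streaming gateway would buffer here
            raise ValueError("incomplete pkt-line, more data needed")
        yield buf[pos + 4:pos + length]
        pos += length


print(list(iter_pkt_lines(b"0009hello0000")))
```

A real gateway would feed this parser incrementally from the SSH channel data and keep the unconsumed tail for the next call.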

Anatomy of a Git Packfile

A packfile (.pack) is Git's way of efficiently bundling and transferring repository data. It is transferred in pkt-line format like every other Git protocol packet and contains multiple Git objects (e.g. blobs or commits), often delta-compressed to save space. A packfile has the following structure:

- A header: Consists of 4-byte signature ('PACK'), 4-byte version number (usually 2) and 4-byte object count

- A sequence of objects, each with:

  - Type (commit, blob, etc.)

  - Size (encoded as a variable-length integer)

  - Optional delta reference (offset for ofs-delta, base object ID for ref-delta)

  - Compressed content

- A trailer: SHA-1 or SHA-256 checksum of the entire stream
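To make this structure concrete, here is a minimal Python sketch that walks a complete packfile held in memory. The function name is our own, a SHA-1 repository (20-byte trailer) is assumed, and delta entries are returned unresolved. Since an object entry does not state its compressed length, the sketch lets zlib report where each stream ends.

```python
import hashlib
import struct
import zlib
from typing import List, Tuple

TYPE_NAMES = {1: "commit", 2: "tree", 3: "blob", 4: "tag",
              6: "ofs-delta", 7: "ref-delta"}


def parse_pack(pack: bytes) -> List[Tuple[str, bytes]]:
    """Return (type name, inflated payload) per entry; deltas stay unresolved."""
    signature, version, count = struct.unpack(">4sII", pack[:12])
    if signature != b"PACK" or version not in (2, 3):
        raise ValueError("not a supported packfile")
    end = len(pack) - 20                      # assumption: SHA-1 trailer
    if hashlib.sha1(pack[:end]).digest() != pack[end:]:
        raise ValueError("trailer checksum mismatch")
    pos, entries = 12, []
    for _ in range(count):
        byte = pack[pos]
        pos += 1
        obj_type = (byte >> 4) & 0x07         # 3 type bits
        size, shift = byte & 0x0F, 4          # first 4 size bits
        while byte & 0x80:                    # further size bits, 7 per byte
            byte = pack[pos]
            pos += 1
            size |= (byte & 0x7F) << shift
            shift += 7
        if obj_type == 7:                     # ref-delta: skip 20-byte base ID
            pos += 20
        elif obj_type == 6:                   # ofs-delta: skip encoded offset
            while pack[pos] & 0x80:
                pos += 1
            pos += 1
        inflater = zlib.decompressobj()
        payload = inflater.decompress(pack[pos:end])
        if not inflater.eof or len(payload) != size:
            raise ValueError("corrupt object entry")
        pos = end - len(inflater.unused_data)  # next entry starts here
        entries.append((TYPE_NAMES.get(obj_type, "unknown"), payload))
    return entries


# A one-object pack built by hand: a blob containing "hello".
body = b"PACK" + struct.pack(">II", 2, 1) + b"\x35" + zlib.compress(b"hello")
print(parse_pack(body + hashlib.sha1(body).digest()))  # [('blob', b'hello')]
```

A gateway working on a live stream cannot wait for the trailer before acting, so in practice the checksum would be computed incrementally while objects are parsed.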

# Packfile Example from Gateway Perspective:

0033 → pkt-line header (length: 51 bytes total)

01 → side-band channel byte (1 = packfile data)

50 41 43 4b → "PACK" (Packfile header start)

00 00 00 02 → Packfile version = 2

00 00 00 01 → Packfile contains 1 object

35 → Object Header: type = BLOB, size = 5

78 9c cb 48 cd c9 c9 07 00 06 2c 02 15

→ zlib compressed content: "hello"

[20-byte checksum] → e.g. SHA-1 checksum of stream

For a gateway, parsing this format is essential in order to analyze, filter or modify the data in transit. Git's packfile format documentation offers a much deeper insight here, but we will take a more superficial look at the implementation while trying to point out the most important challenges.
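When the side-band or side-band-64k capability has been negotiated, each pkt-line payload starts with a one-byte channel number, and only channel 1 carries packfile bytes. The following Python sketch separates the channels. The function name is hypothetical, and the input is assumed to be pkt-line payloads whose 4-byte length prefix has already been removed.

```python
from typing import Iterable, Tuple


def split_sideband(payloads: Iterable[bytes]) -> Tuple[bytes, bytes]:
    """Split side-band payloads into (packfile bytes, progress text)."""
    pack, progress = bytearray(), bytearray()
    for payload in payloads:
        if not payload:
            continue
        band, data = payload[0], payload[1:]
        if band == 1:        # packfile data
            pack += data
        elif band == 2:      # progress messages meant for the user's terminal
            progress += data
        elif band == 3:      # fatal error reported by the remote side
            raise RuntimeError(data.decode("utf-8", "replace"))
        else:
            raise ValueError(f"unknown side-band channel {band}")
    return bytes(pack), bytes(progress)


pack, progress = split_sideband([b"\x02Counting objects\n", b"\x01PACK", b"\x01..."])
print(pack, progress)
```

Only the reassembled channel 1 bytes form the packfile that is parsed further; a filtering gateway would typically forward the other channels unchanged.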

Encoded Integers in Git: Variable-Length Formats

One common challenge when decoding Git streams is handling variable-length integers. Git uses a compact encoding similar to base-128 "varints", where the most significant bit (MSB) of each byte indicates continuation.

This format appears in:

- The object size field in packfiles

- The base and result sizes at the start of a delta

- Offset references in ofs-delta entries

# Example:

Byte 1: 10001100 → MSB set → continuation

Byte 2: 00000010 → MSB not set → end

Combining the lower 7 bits of each byte, least significant group first, gives us 00000001 00001100, which is then interpreted as an unsigned integer (268).

The correct interpretation of these integers is crucial, especially when filtering packfile content on the fly. Git does not make this any easier - it mixes little-endian and big-endian encodings in different contexts, so special care must be taken to interpret each value according to its format.
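The two byte orders can be illustrated with a short Python sketch; the function names are our own. The first decoder handles the little-endian form used for sizes, where the first byte carries the lowest bits. The second handles the offset of an ofs-delta entry, which is big-endian and adds one for every continuation byte.

```python
from typing import Tuple


def decode_size_varint(data: bytes, pos: int = 0) -> Tuple[int, int]:
    """Little-endian base-128: returns (value, position after the integer)."""
    value = shift = 0
    while True:
        byte = data[pos]
        pos += 1
        value |= (byte & 0x7F) << shift
        shift += 7
        if not byte & 0x80:          # MSB clear: last byte
            return value, pos


def decode_ofs_delta_offset(data: bytes, pos: int = 0) -> Tuple[int, int]:
    """Big-endian base-128 with a +1 bias per continuation byte."""
    byte = data[pos]
    pos += 1
    value = byte & 0x7F
    while byte & 0x80:
        byte = data[pos]
        pos += 1
        value = ((value + 1) << 7) | (byte & 0x7F)
    return value, pos


print(decode_size_varint(bytes([0b10001100, 0b00000010])))  # (268, 2)
print(decode_ofs_delta_offset(bytes([0x81, 0x00])))         # (256, 2)
```

Note that the size in an object header is a variation of the first form: its first byte contributes only four size bits because three bits are taken by the object type.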

Delta Objects: Resolving and Reconstructing Data

Delta compression is at the core of Git's efficiency. Instead of transmitting full objects, Git often sends differences (deltas) against known base objects in its packfiles to save bandwidth.

There are two types:

- ofs-delta: references an earlier object by offset

- ref-delta: references a base object by its object ID (SHA-1); in thin packs the base may lie outside the packfile

A delta contains:

- The size of the base and result objects

- A sequence of instructions, e.g.:

  - COPY: copy bytes from base

  - INSERT: insert new bytes
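A minimal Python sketch of how such a delta can be applied once the base object is available is shown below. The function names are our own, and the whole delta is assumed to be inflated and in memory. A COPY instruction has its most significant bit set, and its lower bits select which offset and size bytes follow. An INSERT instruction carries its length in the instruction byte itself.

```python
from typing import Tuple


def _read_size(delta: bytes, pos: int) -> Tuple[int, int]:
    """Read one little-endian base-128 size; return (value, new position)."""
    value = shift = 0
    while True:
        byte = delta[pos]
        pos += 1
        value |= (byte & 0x7F) << shift
        shift += 7
        if not byte & 0x80:
            return value, pos


def apply_delta(base: bytes, delta: bytes) -> bytes:
    """Rebuild the result object from its base object and an inflated delta."""
    base_size, pos = _read_size(delta, 0)
    result_size, pos = _read_size(delta, pos)
    if base_size != len(base):
        raise ValueError("delta does not belong to this base object")
    out = bytearray()
    while pos < len(delta):
        cmd = delta[pos]
        pos += 1
        if cmd & 0x80:                       # COPY from base
            offset = size = 0
            for bit in range(4):             # bits 0-3: offset bytes present
                if cmd & (1 << bit):
                    offset |= delta[pos] << (8 * bit)
                    pos += 1
            for bit in range(3):             # bits 4-6: size bytes present
                if cmd & (0x10 << bit):
                    size |= delta[pos] << (8 * bit)
                    pos += 1
            size = size or 0x10000           # size 0 means 65536
            if offset + size > len(base):
                raise ValueError("copy exceeds base object")
            out += base[offset:offset + size]
        elif cmd:                            # INSERT the next cmd bytes
            out += delta[pos:pos + cmd]
            pos += cmd
        else:
            raise ValueError("reserved delta opcode 0")
    if len(out) != result_size:
        raise ValueError("result size mismatch")
    return bytes(out)


delta = bytes([0x0B, 0x10, 0x90, 0x05, 0x05]) + b", Git" + bytes([0x91, 0x05, 0x06])
print(apply_delta(b"hello world", delta))    # b'hello, Git world'
```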

Parsing and interpreting delta objects within the packfile plays a crucial role for a gateway. In order to filter the contents of the repository properly, the file contents must first be restored as completely as possible. This works well enough for ofs-delta objects, as they reference objects in the same packfile, so their “direct environment” can be resolved. But what happens if an ofs-delta chain ends in a ref-delta object, or if a ref-delta object is encountered directly? In this case, the base object may lie outside the packfile. To resolve it, the referenced objects would have to be cached so that they can be reused in a later connection. To ensure that this local information is up-to-date and synchronized with the remote repository, the gateway would theoretically have to fetch from the remote itself. Whether the resulting complexity and added delay are acceptable needs to be weighed against the importance of the security policy to be enforced.

Alternatively, the less complex option accepts missing information in the parsing process. The unresolvable parts must then be substituted with placeholders, and the policy needs to accept fragmented file content in the case of ref-delta objects.
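One way to picture this fallback is the following Python sketch, which is an illustration under our own assumptions and not a description of any particular product. It keeps the literal bytes of INSERT instructions and replaces every COPY from the missing base with a filler byte, so the fragment lengths and positions in the result stay correct.

```python
def reconstruct_without_base(delta: bytes, filler: bytes = b"?") -> bytes:
    """Rebuild what an inflated delta reveals when its base is unavailable."""
    if len(filler) != 1:
        raise ValueError("filler must be a single byte")
    pos = 0
    for _ in range(2):                       # skip base size and result size
        while delta[pos] & 0x80:
            pos += 1
        pos += 1
    out = bytearray()
    while pos < len(delta):
        cmd = delta[pos]
        pos += 1
        if cmd & 0x80:                       # COPY: content is unknown
            pos += bin(cmd & 0x0F).count("1")  # offset bytes are not needed
            size = 0
            for bit in range(3):
                if cmd & (0x10 << bit):
                    size |= delta[pos] << (8 * bit)
                    pos += 1
            out += filler * (size or 0x10000)
        elif cmd:                            # INSERT: literal bytes are known
            out += delta[pos:pos + cmd]
            pos += cmd
        else:
            raise ValueError("reserved delta opcode 0")
    return bytes(out)


delta = bytes([0x0B, 0x10, 0x90, 0x05, 0x05]) + b", Git" + bytes([0x91, 0x05, 0x06])
print(reconstruct_without_base(delta))       # b'?????, Git??????'
```

The inserted bytes are exactly the newly added content, which is often the part a policy cares about most when inspecting a push.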

Interpreting Git Data in a Gateway Context

Gateways that need to inspect and filter Git traffic must go beyond packet boundaries and understand semantic structures inside the stream. This means:

- Parsing binary, compressed streams

- Interpreting different object types individually

- Understanding which files are affected

- Understanding if restricted data is being transferred
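To find out which files are affected, a gateway has to connect blob contents to file names, and those names live in tree objects. The Python sketch below parses an inflated tree object and computes the object ID of a payload, assuming a SHA-1 repository. The function names are hypothetical.

```python
import hashlib
from typing import Iterator, Tuple


def object_id(obj_type: str, payload: bytes) -> str:
    """Compute the SHA-1 object ID Git assigns to an object."""
    header = f"{obj_type} {len(payload)}".encode("ascii") + b"\0"
    return hashlib.sha1(header + payload).hexdigest()


def iter_tree_entries(tree: bytes) -> Iterator[Tuple[str, str, str]]:
    """Yield (mode, name, object ID) for each entry of an inflated tree."""
    pos = 0
    while pos < len(tree):
        space = tree.index(b" ", pos)        # mode and name are split by a space
        nul = tree.index(b"\0", space)       # name ends with a NUL byte
        mode = tree[pos:space].decode("ascii")
        name = tree[space + 1:nul].decode("utf-8", "replace")
        raw_id = tree[nul + 1:nul + 21]      # 20 raw bytes follow the name
        if len(raw_id) != 20:
            raise ValueError("truncated tree entry")
        pos = nul + 21
        yield mode, name, raw_id.hex()


blob_id = object_id("blob", b"hello\n")
tree = b"100644 README.md\0" + bytes.fromhex(blob_id)
print(list(iter_tree_entries(tree)))
```

With the IDs of all blobs in a packfile at hand, the entries of the transferred trees tell the policy which path each blob belongs to.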

As described above, resolving deltas to inspect a file's full content requires additional buffering, introduces delays and may require extra requests to the remote. Complete solutions place a greater burden on resources and bandwidth. For this reason, compromise solutions must be found that offer an acceptable trade-off between performance and security.

Our SSH Gateway offers a fast and secure solution that reassembles files as completely as the available context allows and then filters them using a customizable policy.

What We've Learned About Dealing with Git Traffic

Understanding Git's transport and packfile mechanisms is key for anyone building tools that sit in the middle of Git traffic - especially gateways that aim to inspect, filter, or enforce rules on repository data. While the protocol places a lot of emphasis on efficiency and is well optimized, its use of delta objects, different variable-length encodings and compressed streaming requires careful handling.

With the right abstractions and a solid parser, however, even complex binary Git instructions become manageable. This opens the door to powerful filtering tools at the transport level and ultimately makes it possible to control some of an organization's most sensitive data in transit.

Attractive user stories that can now be implemented are:

- allowing developers broad access to all repositories, enabling inner source models and searching in existing code, while preventing an engineer from downloading more data than appropriate

- preventing uploads of secrets such as login credentials and private keys into a repository before the data even reaches GitHub
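As a rough illustration of the second user story, a policy check on a reconstructed blob could look like the Python sketch below. The two patterns and all names are our own simplified assumptions; a production policy would need a far more thorough rule set.

```python
import re
from typing import List

# Illustrative patterns only, not a complete secret detection rule set.
SECRET_PATTERNS = {
    "private key": re.compile(rb"-----BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-----"),
    "password assignment": re.compile(
        rb"\bpassword\s*[:=]\s*['\"][^'\"\s]{8,}['\"]", re.IGNORECASE
    ),
}


def find_secrets(blob: bytes) -> List[str]:
    """Return the names of all patterns that match the blob content."""
    return [name for name, pattern in SECRET_PATTERNS.items()
            if pattern.search(blob)]


print(find_secrets(b"-----BEGIN OPENSSH PRIVATE KEY-----\n..."))
```

If the list is not empty for any blob of a push, the gateway can reject the transfer before the packfile reaches the remote repository.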

The articles in this series

- The SSH Core Protocol and the SSH Remote Command

- SSH Remote Shell from a Gateway Perspective

- SCP from a Gateway Perspective

- SFTP from a Gateway Perspective

- Git via SSH from a Gateway Perspective [this article]

- TCP Tunnels via SSH from a Gateway Perspective